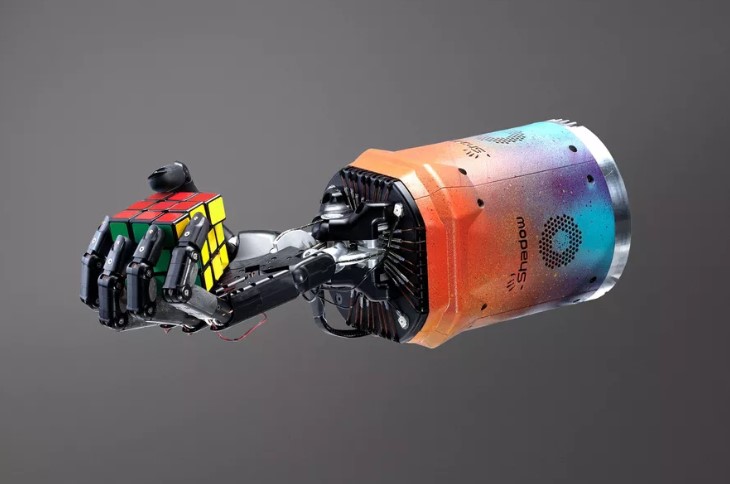

Artificial intelligence research organization OpenAI has achieved a new milestone in its quest to build general purpose, self-learning robots. The group’s robotics division says Dactyl, its humanoid robotic hand first developed last year, has learned to solve a Rubik’s cube one-handed. OpenAI sees the feat as a leap forward both for the dexterity of robotic appendages and its own AI software, which allows Dactyl to learn new tasks using virtual simulations before it is presented with a real, physical challenge to overcome.

In a demonstration video showcasing Dactyl’s new talent, we can see the robotic hand fumble its way toward a complete cube solve with clumsy yet accurate maneuvers. It takes many minutes, but Dactyl is eventually able to solve the puzzle. It’s somewhat unsettling to see in action, if only because the movements look noticeably less fluid than human ones and especially disjointed when compared to the blinding speed and raw dexterity on display when a human speedcuber solves the cube in a matter of seconds.

But for OpenAI, Dactyl’s achievement brings it one step closer to a much sought-after goal for the broader AI and robotics industries: a robot that can learn to perform a variety of real-world tasks without months or years of real-world training and without being specifically programmed for each one.

“Plenty of robots can solve Rubik’s cubes very fast. The important difference between what they did there and what we’re doing here is that those robots are very purpose-built,” says Peter Welinder, a research scientist and robotics lead at OpenAI. “Obviously there’s no way you can use the same robot or same approach to perform another task. The robotics team at OpenAI have very different ambitions. We’re trying to build a general purpose robot. Similar to how humans and how our human hands can do a lot of things, not just a specific task, we’re trying to build something that is much more general in its scope.”

Welinder is referencing a series of robots over the last few years that have pushed Rubik’s cube solving far beyond the limitations of human hands and minds. In 2016, semiconductor maker Infineon developed a robot specifically to solve a Rubik’s cube at superhuman speeds, and the bot managed to do so in under one second. That crushed the sub-five-second human world record at the time. Two years later, a machine developed by MIT solved a cube in less than 0.4 seconds. In late 2018, a Japanese YouTube channel called Human Controller even developed its own self-solving Rubik’s cube using a 3D-printed core attached to programmable servo motors.

In other words, a robot built for one specific task and programmed to perform it as efficiently as possible can typically beat a human, and solving a Rubik’s cube is something software mastered long ago. So developing a robot to solve the cube, even a humanoid one, is not all that remarkable on its own, and even less so at the sluggish speed at which Dactyl operates.

But OpenAI’s Dactyl robot and the software that powers it are very different in design and purpose from a dedicated cube-solving machine. As Welinder says, OpenAI’s ongoing robotics work is not aimed at achieving superior results in narrow tasks, since that requires only that you build a better robot and program it accordingly. That can be done without modern artificial intelligence.

Instead, Dactyl is developed from the ground up as a self-learning robotic hand that approaches new tasks much like a human would. It’s trained using software that tries, in a rudimentary way at the moment, to replicate the millions of years of evolution that help us learn to use our hands instinctively as children. That could one day, OpenAI hopes, help humanity develop the kinds of humanoid robots we know only from science fiction, robots that can safely operate in society without endangering us and perform a wide variety of tasks in environments as chaotic as city streets and factory floors.

To learn how to solve a Rubik’s cube one-handed, OpenAI did not explicitly program Dactyl to solve the toy; free software on the internet can do that for you. It also chose not to program individual motions for the hand to perform, because it wanted the hand to discover those movements on its own. Instead, the robotics team gave the hand’s underlying software the end goal of solving a scrambled cube and used modern AI, specifically deep reinforcement learning, an incentive-based technique in which software learns by trial and error from rewards, to help it work out a path to that goal by itself. The same approach to training AI agents is how OpenAI developed its world-class Dota 2 bot.
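To make the idea of reward-driven trial and error a little more concrete, here is a minimal tabular Q-learning sketch on a deliberately tiny toy problem. It is not OpenAI’s method: the article does not describe Dactyl’s algorithm or reward design, and the toy task (an agent on a six-cell track that is rewarded only for reaching the last cell), along with every parameter value, is a hypothetical assumption chosen for illustration. The point is only that the agent is never told *how* to reach the goal; it is given a reward and left to discover the behavior.

```python
import random

# Hypothetical toy task (an assumption, not Dactyl's setup): an agent on a
# 1-D track of cells 0..N_STATES-1 must reach the goal cell. It is never told
# how to get there; it only receives a reward when it arrives.
N_STATES = 6
ACTIONS = (-1, +1)  # step left or step right
GOAL = N_STATES - 1


def step(state, action):
    """Apply an action; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True  # the only reward: reaching the goal
    return next_state, 0.0, False


def best_action(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    values = [q[(state, a)] for a in ACTIONS]
    top = max(values)
    return rng.choice([a for a, v in zip(ACTIONS, values) if v == top])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: estimate action values purely from rewards."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = best_action(q, state, rng)
            next_state, reward, done = step(state, action)
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Nudge the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """The learned behavior: the best action in each non-goal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the policy should step right (+1) from every cell, even though nothing in the code says “go right.” Dactyl’s problem is vastly harder, with 24 joints, continuous motion, and camera input, which is why real systems replace the lookup table with deep neural networks.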

But until recently, it’s been much easier to train an AI agent to do something virtually — playing a computer game, for example — than to train it to perform a real-world task. That’s because training software to do something in a virtual world can be sped up, so that the AI can spend the equivalent of tens of thousands of years training in just months of real-world time, thanks to thousands of high-end CPUs and ultra-powerful GPUs working in parallel.

Training a physical robot on a physical task at that scale isn’t feasible. That’s why OpenAI is trying to pioneer new methods of robotic training that use simulated environments in place of the real world, something the robotics industry has barely experimented with. That way, the software can practice extensively at an accelerated pace across many computers simultaneously, with the hope that it retains that knowledge when it begins controlling a real robot.

Because of these training limitations and obvious safety concerns, robots used commercially today generally do not use AI and are instead programmed with very specific instructions. “The way it’s been approached in the past is that you use very specialized algorithms to solve tasks, where you have an accurate model of both the robot and the environment in which you’re operating,” Welinder says. “For a factory robot, you have very accurate models of those and you know exactly the environment you’re working on. You know exactly how it will be picking up the particular part.”

This is also why current robots are far less versatile than humans. It requires large amounts of time, effort, and money to reprogram a robot that assembles, say, one specific part of an automobile or a computer component to do something else. Present a robot that hasn’t been properly trained with even a simple task that involves any level of human dexterity or visual processing and it would fail miserably. With modern AI techniques, however, robots could be modeled like humans, so that they can use the same intuitive understanding of the world to do everything from opening doors to frying an egg. At least, that’s the dream.

We’re still decades away from that level of sophistication, and the leaps the AI community has made on the software side, in areas like self-driving cars, machine translation, and image recognition, have not exactly translated to next-generation robots. Right now, OpenAI is just trying to mimic the complexity of one human body part and to get that robotic analog to operate more naturally.

That’s why Dactyl is a 24-joint robotic hand modeled after a human hand, instead of the claw or pincer style robotic grippers you see in factories. And for the software that powers Dactyl to learn how to utilize all of those joints in a way a human would, OpenAI put it through thousands of years of training in simulation before trying the physical cube solve.

“If you’re training things on the real world robot, obviously whatever you’re learning is working on what you actually want to deploy your algorithm on. In that way, it’s much simpler. But algorithms today need a lot of data. To train a real world robot, to do anything complex, you need many years of experience,” Welinder says. “Even for a human, it takes a couple of years, and humans have millions of years of evolution to have the learning capabilities to operate a hand.”

In a simulation, however, Welinder says training can be accelerated, just like with game-playing and other tasks popular as AI benchmarks. “This takes on the order of thousands of years to train the algorithm. But this only takes a few days because we can parallelize the training. You also don’t have to worry about the robots breaking or hurting someone as you’re training these algorithms,” he adds. Yet researchers have in the past run into considerable trouble trying to get virtual training to work on physical robots. OpenAI says it is among the first organizations to really see progress in this regard.
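Welinder’s figures imply an enormous speedup over real time, and some back-of-the-envelope arithmetic shows where it comes from. The inputs below are illustrative assumptions, not numbers reported by OpenAI: 1,000 years of simulated experience standing in for “thousands of years,” 3 days of wall-clock time standing in for “a few days,” and a hypothetical pool of 4,000 simulators running in parallel.

```python
DAYS_PER_YEAR = 365.25


def required_speedup(experience_years, wall_clock_days):
    """How many times faster than real time training must run overall."""
    return experience_years * DAYS_PER_YEAR / wall_clock_days


def per_worker_speedup(experience_years, wall_clock_days, workers):
    """Speedup each simulator needs if the work is split evenly across workers."""
    return required_speedup(experience_years, wall_clock_days) / workers


if __name__ == "__main__":
    # Assumed, illustrative inputs (not OpenAI's figures).
    total = required_speedup(1_000, 3)
    each = per_worker_speedup(1_000, 3, 4_000)
    print(f"overall: {total:,.0f}x real time; per worker: {each:.1f}x")
```

With these assumed inputs, training must run about 121,750 times faster than real time overall, which works out to roughly 30 times real time per simulator. Most of the speedup comes from running many simulations at once rather than from any single simulation running impossibly fast, which is exactly what a single physical robot cannot do.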

When it was given a real cube, Dactyl put its training to use and solved it on its own, and it did so under a variety of conditions it had never been explicitly trained for. That includes solving the cube one-handed with a glove on, with two of its fingers taped together, and while OpenAI members continuously interfered with it by poking it with other objects and showering it with bubbles and pieces of confetti-like paper.

“We found that in all of those perturbations, the robot was still able to successfully turn the Rubik’s cube. But it did not go through that in training,” says Matthias Plappert, Welinder’s fellow robotics team lead at OpenAI. “The robustness that we found when we tried this on the physical robot was surprising to us.”

That’s why OpenAI sees Dactyl’s newly acquired skill as equally important for both the advancement of robotic hardware and AI training. Even the most advanced robots in the world right now, like the humanoid and dog-like bots developed by industry leader Boston Dynamics, cannot operate autonomously, and they require extensive task-specific programming and frequent human intervention to carry out even basic actions.

OpenAI says Dactyl is a small but vital step toward the kind of robots that might one day perform manual labor or household tasks and even work alongside humans, instead of in closed-off environments, without any explicit programming governing their actions.

In that vision for the future, the ability for robots to learn new tasks and adapt to changing environments will be as much about the flexibility of the AI as it is about the robustness of the physical machine. “These methods are really starting to demonstrate that these are the solutions to handling all the inherent complication and the messiness of the physical world we live in,” Plappert says.