Researchers at Hong Kong Baptist University (HKBU) have partnered with a team from Tencent Machine Learning to create a new technique for training artificial intelligence (AI) machines faster than ever before while maintaining accuracy.

During the experiment, the team trained two popular deep neural networks, AlexNet and ResNet-50, in just four minutes and 6.6 minutes respectively. Previously, the fastest training times were 11 minutes for AlexNet and 15 minutes for ResNet-50.

AlexNet and ResNet-50 are deep neural networks trained on ImageNet, a large-scale dataset for visual recognition. Once trained, each network is able to recognise and label an object in a given photo. The new training times are significantly shorter than the previous records and beat all other existing systems.

Machine learning is a set of mathematical approaches that enable computers to learn from data without explicitly being programmed by humans. The resulting algorithms can then be applied to a variety of data and visual recognition tasks used in AI.

The HKBU team comprises Professor Chu Xiaowen and Ph.D. student Shi Shaohuai from the Department of Computer Science. Professor Chu said, “We have proposed a new optimised training method that significantly improves the best output without losing accuracy. In AI training, researchers strive to train their networks faster, but this can lead to a decrease in accuracy. As a result, training machine-learning models at high speed while maintaining accuracy and precision is a vital goal for scientists.”

Professor Chu said the time required to train AI machines is affected by both computing time and communication time. The research team achieved breakthroughs in both areas to set this record.

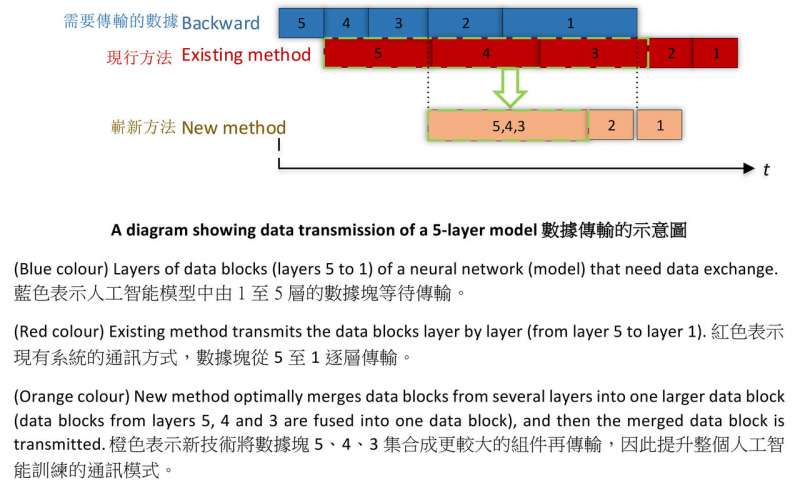

These included adopting FP16, a 16-bit (half-precision) floating-point format, in place of the more traditional 32-bit format, FP32, which made computation much faster without losing accuracy. As communication time is affected by the size of data blocks, the team also came up with a communication technique named “tensor fusion,” which combines smaller pieces of data into larger ones, optimising the transmission pattern and thereby improving the efficiency of communication during AI training.
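To give a feel for why combining small pieces of data helps, the sketch below groups per-layer gradient sizes into larger messages and compares the estimated transfer time. It is an illustration only, not the team's implementation: the greedy grouping rule, the `fuse_tensors` and `comm_time` helpers, the layer sizes, and the latency and bandwidth figures are all assumptions chosen for the example. It uses a simple cost model in which every message pays a fixed start-up latency plus a cost proportional to its size. The last line also assumes gradients are sent in FP16 (2 bytes per value instead of 4), which the article does not state.

```python
from typing import List


def fuse_tensors(sizes: List[int], threshold: int) -> List[List[int]]:
    """Greedily group consecutive tensor sizes (in elements) into buckets.

    A bucket is closed as soon as it holds at least `threshold` elements.
    Hypothetical grouping rule, used here only for illustration.
    """
    buckets: List[List[int]] = []
    current: List[int] = []
    current_size = 0
    for size in sizes:
        current.append(size)
        current_size += size
        if current_size >= threshold:
            buckets.append(current)
            current, current_size = [], 0
    if current:  # keep any leftover tensors as a final, smaller bucket
        buckets.append(current)
    return buckets


def comm_time(message_sizes: List[int], bytes_per_element: int,
              latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Estimated time to send all messages one after another.

    Each message costs a fixed latency plus its size divided by bandwidth.
    """
    return sum(latency_s + n * bytes_per_element / bandwidth_bytes_per_s
               for n in message_sizes)


# Assumed values: gradient sizes per layer (in elements) and network figures.
layer_sizes = [1_000, 2_000, 500, 50_000, 1_500, 3_000, 200_000, 800]
LATENCY_S = 50e-6   # assumed start-up cost per message
BANDWIDTH = 10e9    # assumed bandwidth in bytes per second

buckets = fuse_tensors(layer_sizes, threshold=50_000)
fused_sizes = [sum(bucket) for bucket in buckets]

unfused_fp32 = comm_time(layer_sizes, 4, LATENCY_S, BANDWIDTH)
fused_fp32 = comm_time(fused_sizes, 4, LATENCY_S, BANDWIDTH)
fused_fp16 = comm_time(fused_sizes, 2, LATENCY_S, BANDWIDTH)

print(f"messages: {len(layer_sizes)} unfused, {len(fused_sizes)} fused")
print(f"unfused FP32: {unfused_fp32 * 1e6:.1f} microseconds")
print(f"fused FP32:   {fused_fp32 * 1e6:.1f} microseconds")
print(f"fused FP16:   {fused_fp16 * 1e6:.1f} microseconds")
```

Under this model the total amount of data is unchanged by fusion; the saving comes from paying the per-message start-up cost fewer times, which matters most when many of the pieces are small.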

This new technique can be adopted in large-scale image classification, and it can also be applied to other AI applications, including machine translation; natural language processing (NLP), which enhances interaction between computers and human language; medical imaging analysis; and online multiplayer battle games.